WebSockets

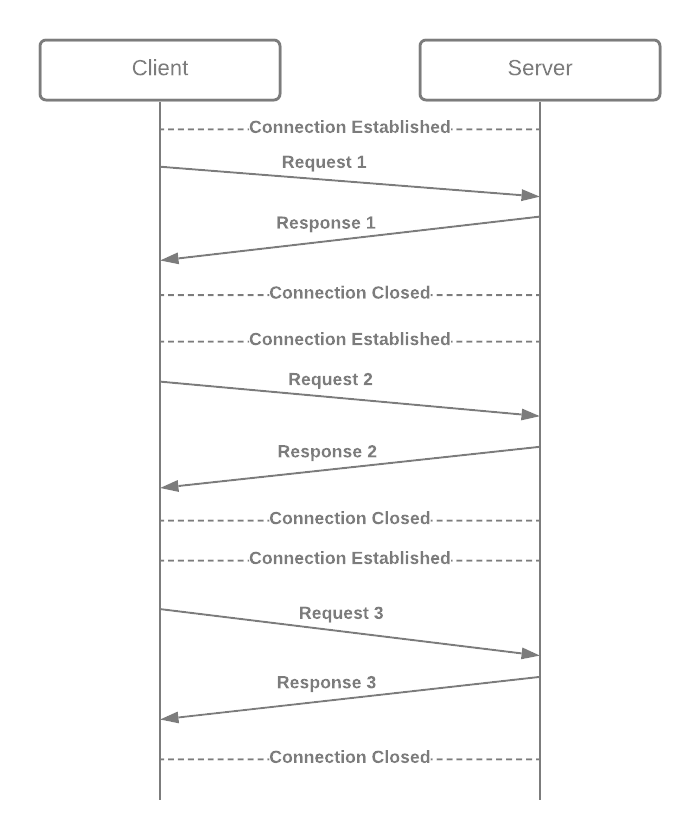

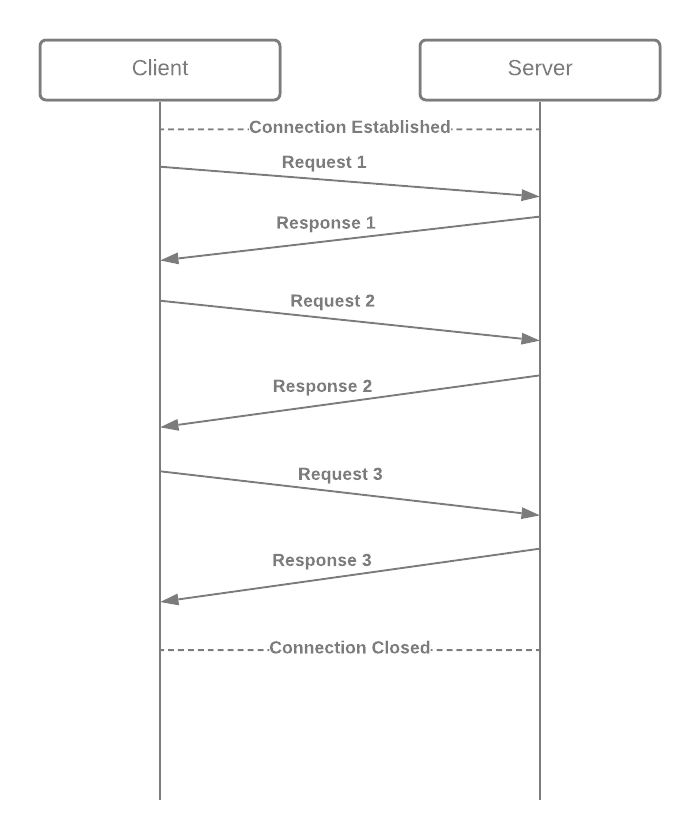

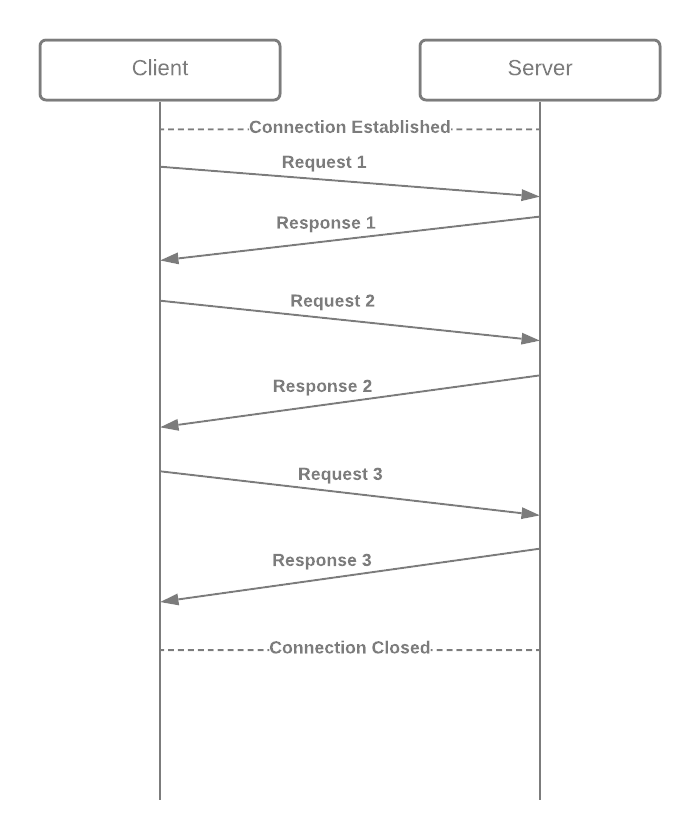

A normal flow between a client and a server over an HTTP connection consists of requests and responses.

The client sends a request to the server, and the server sends back a response. However, some use cases require the server to send data to the client on its own initiative, for example a chat application or a stock market tracker. In such scenarios, where the server needs to push data to the client at will, the WebSocket protocol solves the problem.

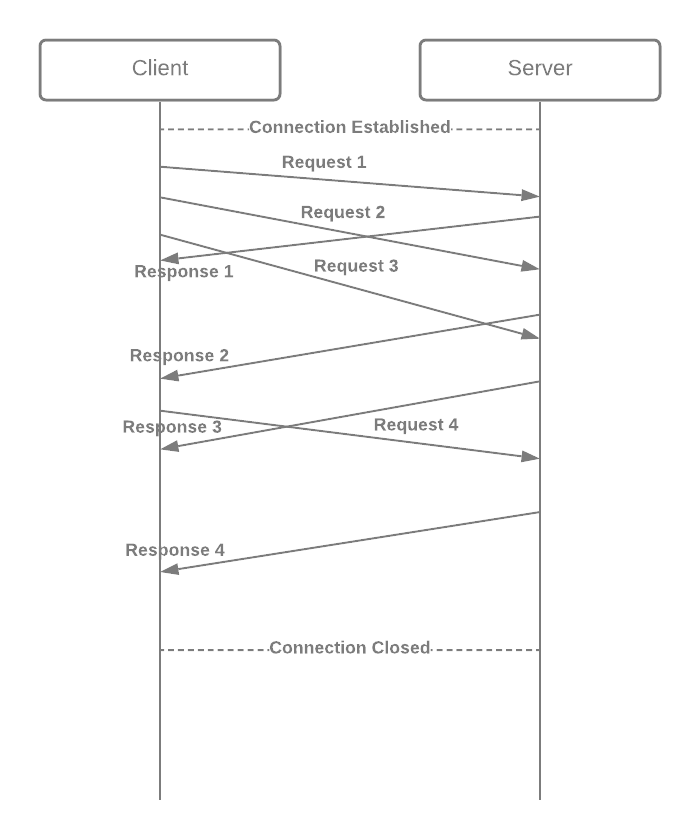

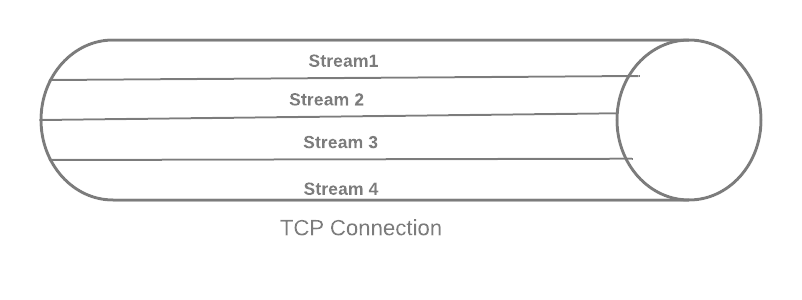

WebSocket provides a full-duplex communication channel over a single TCP connection. Both HTTP and WebSocket are application-layer (layer 7) protocols in the OSI model, and both run on top of TCP at layer 4.

A WebSocket connection URL looks like ws://some.example.com, or wss://some.example.com for a secure (TLS) connection.
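As a small illustration of the two URL schemes, here is a minimal sketch using only Python's standard library. The helper name parse_ws_url is hypothetical. It relies on the defaults from RFC 6455: ws:// uses port 80 like HTTP, and wss:// uses port 443 over TLS like HTTPS. The "resource" it returns is the path plus query that the client puts in its GET request line.

```python
from urllib.parse import urlsplit

# Default ports per RFC 6455: ws:// reuses HTTP's port 80, wss:// reuses HTTPS's 443.
DEFAULT_PORTS = {"ws": 80, "wss": 443}


def parse_ws_url(url: str) -> tuple[str, int, str, bool]:
    """Return (host, port, resource, uses_tls) for a ws:// or wss:// URL.

    parse_ws_url is a hypothetical helper for illustration, not a library API.
    """
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    if scheme not in DEFAULT_PORTS:
        raise ValueError(f"not a WebSocket URL: {url!r}")
    host = parts.hostname
    if not host:
        raise ValueError(f"missing host: {url!r}")
    # Fall back to the scheme's default port when none is given explicitly.
    port = parts.port or DEFAULT_PORTS[scheme]
    # Resource name for the GET request line: path (default "/") plus query.
    resource = parts.path or "/"
    if parts.query:
        resource += "?" + parts.query
    return host, port, resource, scheme == "wss"
```

For example, parse_ws_url("wss://some.example.com/chat?room=1") yields ("some.example.com", 443, "/chat?room=1", True), so the client knows to open a TLS connection to port 443 before starting the handshake.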

To establish the connection, the WebSocket handshake uses the HTTP Upgrade header to switch from the HTTP protocol to the WebSocket protocol.

WebSocket handshake

- The client sends an HTTP/1.1 GET request with the headers Upgrade: websocket and Connection: Upgrade.

- The server responds with 101 Switching Protocols.
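The two handshake steps can be sketched as plain strings. This is a minimal sketch, not a working server: the function names are hypothetical, and real sockets, header parsing and validation are left out. The details that come from RFC 6455 are the Sec-WebSocket-Key nonce (16 random bytes, base64-encoded), Sec-WebSocket-Version: 13, and the Sec-WebSocket-Accept value, computed as base64(SHA-1(key + fixed GUID)). The accept value lets the client check that the server really understood the upgrade request.

```python
import base64
import hashlib
import os

# Fixed GUID defined by RFC 6455 for computing Sec-WebSocket-Accept.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def make_client_key() -> str:
    """Random 16-byte nonce, base64-encoded, sent as Sec-WebSocket-Key."""
    return base64.b64encode(os.urandom(16)).decode("ascii")


def build_upgrade_request(host: str, resource: str, key: str) -> str:
    """Step 1: the client's HTTP/1.1 GET request asking to upgrade."""
    return (
        f"GET {resource} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Key: {key}\r\n"
        "Sec-WebSocket-Version: 13\r\n"
        "\r\n"
    )


def accept_value(key: str) -> str:
    """Sec-WebSocket-Accept = base64(SHA-1(key + GUID))."""
    digest = hashlib.sha1((key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def build_switching_response(key: str) -> str:
    """Step 2: the server's 101 Switching Protocols reply."""
    return (
        "HTTP/1.1 101 Switching Protocols\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Accept: {accept_value(key)}\r\n"
        "\r\n"
    )
```

Using the sample key from RFC 6455, accept_value("dGhlIHNhbXBsZSBub25jZQ==") returns "s3pPLMBiTxaQ9kYGzzhZRbK+xOo=". After the 101 response, both sides stop speaking HTTP on that TCP connection and exchange WebSocket frames instead.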

Once the connection is established, communication is full duplex: both client and server can send messages over the established connection at any time.
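Messages on the upgraded connection travel as WebSocket frames. The following is a minimal sketch of encoding and decoding a single unfragmented text frame, following the frame layout in RFC 6455: a FIN bit plus opcode byte, a mask bit plus 7-bit length (with 16-bit or 64-bit extended lengths), an optional 4-byte masking key, then the payload. The function names are hypothetical. It assumes one complete frame per call and ignores fragmentation, control frames (ping, pong, close) and binary frames.

```python
import os
import struct

OP_TEXT = 0x1  # opcode for a text frame


def encode_text_frame(text: str, mask: bool) -> bytes:
    """Build one final (FIN=1) text frame.

    Clients must mask the frames they send; servers must not.
    """
    payload = text.encode("utf-8")
    header = bytearray([0x80 | OP_TEXT])  # FIN bit + opcode
    mask_bit = 0x80 if mask else 0x00
    n = len(payload)
    if n < 126:
        header.append(mask_bit | n)  # length fits in 7 bits
    elif n < 1 << 16:
        header.append(mask_bit | 126)
        header += struct.pack("!H", n)  # 16-bit extended length
    else:
        header.append(mask_bit | 127)
        header += struct.pack("!Q", n)  # 64-bit extended length
    if mask:
        key = os.urandom(4)
        header += key
        # XOR each payload byte with the masking key, cycling over its 4 bytes.
        payload = bytes(b ^ key[i % 4] for i, b in enumerate(payload))
    return bytes(header) + payload


def decode_text_frame(frame: bytes) -> str:
    """Parse one complete text frame such as those built above."""
    if (frame[0] & 0x0F) != OP_TEXT:
        raise ValueError("not a text frame")
    masked = frame[1] & 0x80
    n = frame[1] & 0x7F
    pos = 2
    if n == 126:
        (n,) = struct.unpack_from("!H", frame, pos)
        pos += 2
    elif n == 127:
        (n,) = struct.unpack_from("!Q", frame, pos)
        pos += 8
    key = b""
    if masked:
        key = frame[pos:pos + 4]
        pos += 4
    payload = frame[pos:pos + n]
    if masked:
        # Unmasking is the same XOR operation as masking.
        payload = bytes(b ^ key[i % 4] for i, b in enumerate(payload))
    return payload.decode("utf-8")
```

For example, a server sending "hi" to a client produces the three bytes 0x81 0x02 followed by "hi". The same frame format is used in both directions, which is what makes the channel full duplex. The only asymmetry is that client-to-server frames are masked.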

Challenges with WebSockets

- Proxying at layer 7 is difficult, because it requires two levels of WebSocket connectivity: one connection from the client to the proxy and another from the proxy to the backend.

- Layer 7 load balancing is difficult because the communication is stateful.

- Scaling is hard for the same reason: the connection is stateful. Moving a connection from one backend instance to another means resetting it.
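The load-balancing and scaling points can be illustrated with a toy router. This is a minimal sketch under stated assumptions: the class StickyRouter, the client ids and the backend names are all hypothetical, and hash-based pinning is just one simple way to keep a client on the same backend. The router has to remember which backend holds each long-lived connection. When a backend is taken out of service, its connections cannot be handed over and must be reset, so those clients reconnect.

```python
import hashlib


class StickyRouter:
    """Pins each (hypothetical) client id to one backend for its connection's lifetime."""

    def __init__(self, backends: list[str]) -> None:
        self.backends = list(backends)
        self.connections: dict[str, str] = {}  # client id -> backend holding its socket

    def _pick(self, client_id: str) -> str:
        # Deterministic hash so the same client id maps to the same backend.
        digest = hashlib.sha256(client_id.encode("utf-8")).digest()
        return self.backends[int.from_bytes(digest[:8], "big") % len(self.backends)]

    def connect(self, client_id: str) -> str:
        backend = self._pick(client_id)
        self.connections[client_id] = backend
        return backend

    def remove_backend(self, backend: str) -> list[str]:
        """Take a backend out of service; return clients whose connections are reset."""
        self.backends.remove(backend)
        dropped = [c for c, b in self.connections.items() if b == backend]
        for client_id in dropped:
            # The TCP connection lived on the removed backend; the client must reconnect.
            del self.connections[client_id]
        return dropped
```

Connections on the remaining backends are left untouched. Only the clients of the removed backend lose their socket state, which is exactly the reset described above.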