Istio: Getting Started

I recently wrote about service mesh and how it eases managing and deploying services. Istio is an open-source service mesh that layers transparently onto existing distributed applications. It helps create a network of deployed services with load balancing, service-to-service authentication, and monitoring, with no additional coding requirements. Istio deploys a sidecar proxy alongside each service, which provides features like canary deployments, fault injection, and circuit breakers off the shelf.

Let’s take a look at Istio at a high level.

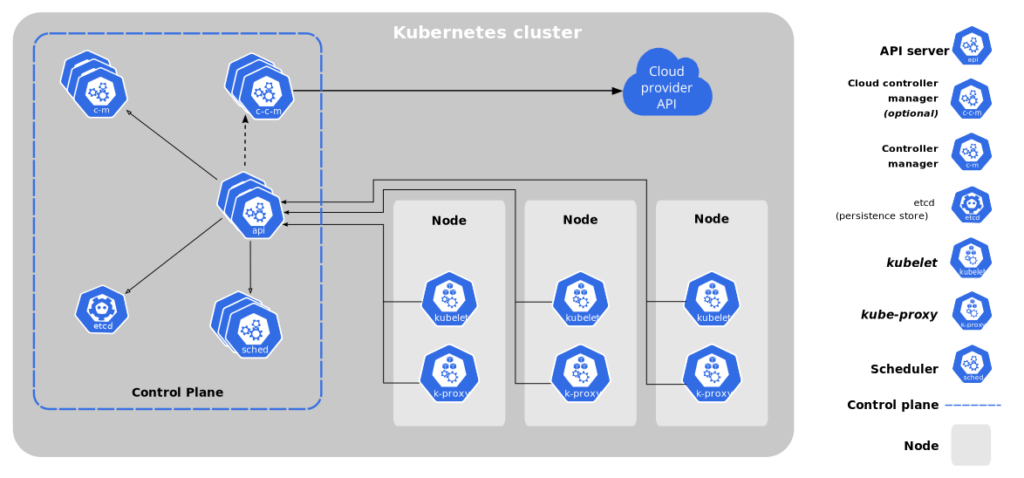

Data Plane: As the diagram above shows, the data plane consists of Envoy proxies deployed as sidecars, which manage traffic for the microservices.

Control Plane: The control plane provides centralized control over the network infrastructure and is where policies and traffic rules are defined.

Functionality configured through the control plane (https://istio.io/latest/docs/concepts/what-is-istio/):

- Automatic load balancing for HTTP, gRPC, WebSocket, and TCP traffic.

- Fine-grained control of traffic behavior with rich routing rules, retries, failovers, and fault injection.

- A pluggable policy layer and configuration API supporting access controls, rate limits, and quotas.

- Automatic metrics, logs, and traces for all traffic within a cluster, including cluster ingress and egress.

- Secure service-to-service communication in a cluster with strong identity-based authentication and authorization.

Currently, Istio is supported on:

- Service deployment on Kubernetes

- Services registered with Consul

- Services running on individual virtual machines

Let’s take a look at the core features provided by Istio.

Traffic management

Istio helps us manage traffic by implementing circuit breakers, timeouts, and retries, and helps us with A/B testing, canary rollouts, and staged rollouts with percentage-based traffic splits.
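To make the percentage-based split more concrete, here is a minimal Python sketch that builds an Istio `VirtualService` manifest as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The service name `reviews`, the subsets `v1` and `v2`, the 90/10 split, and the timeout and retry values are all hypothetical and chosen only for illustration. The subsets would also need a matching `DestinationRule`, which is not shown here.

```python
import json


def build_canary_virtual_service(host, stable_subset, canary_subset, canary_percent):
    """Return a VirtualService manifest that splits traffic between two subsets."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")

    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": f"{host}-canary"},  # hypothetical resource name
        "spec": {
            "hosts": [host],
            "http": [
                {
                    # Illustrative values: overall request timeout and retry budget.
                    "timeout": "5s",
                    "retries": {"attempts": 3, "perTryTimeout": "2s"},
                    # The weights of all routes must add up to 100.
                    "route": [
                        {
                            "destination": {"host": host, "subset": stable_subset},
                            "weight": 100 - canary_percent,
                        },
                        {
                            "destination": {"host": host, "subset": canary_subset},
                            "weight": canary_percent,
                        },
                    ],
                }
            ],
        },
    }


if __name__ == "__main__":
    # Send 10% of traffic to the (hypothetical) v2 canary, the rest to v1.
    manifest = build_canary_virtual_service("reviews", "v1", "v2", canary_percent=10)
    print(json.dumps(manifest, indent=2))
```

Raising `canary_percent` step by step and re-applying the manifest is one way to approach a staged rollout. Circuit breaking is configured separately, on a `DestinationRule`.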

Security

Another core area where Istio helps is security. It helps with authentication, authorization, and encryption off the shelf.

Istio is platform-independent, but combining it with Kubernetes (or infrastructure) network policies brings even greater benefits, including the ability to secure pod-to-pod or service-to-service communication at the network and application layers.

https://istio.io/latest/docs/concepts/what-is-istio/#security
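As one example of the encryption side, the sketch below builds a `PeerAuthentication` manifest that asks Istio to require mutual TLS for workloads in a single namespace. It is only an illustration: the namespace `demo` is hypothetical, and a real setup would usually pair this with authorization policies, which are not shown.

```python
import json


def build_strict_mtls_policy(namespace):
    """Return a PeerAuthentication manifest enforcing mutual TLS in one namespace."""
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        # STRICT rejects plaintext traffic; PERMISSIVE would accept both.
        "spec": {"mtls": {"mode": "STRICT"}},
    }


if __name__ == "__main__":
    # "demo" is a hypothetical namespace used only for this example.
    print(json.dumps(build_strict_mtls_policy("demo"), indent=2))
```

Starting with `PERMISSIVE` mode and switching to `STRICT` once every workload has a sidecar is a common way to migrate without dropping traffic.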

Observability

Another important aspect that Istio helps with is observability. It helps manage tracing, monitoring, and logging. Additionally, it integrates with dashboards and tools such as Kiali, Grafana, and Jaeger to help visualize traffic patterns and manage the services.

Additional Resources

https://platform9.com/blog/kubernetes-service-mesh-a-comparison-of-istio-linkerd-and-consul/

https://dzone.com/articles/metadata-management-in-big-data-systems-a-complete-1